From zero to Platform as a Service with Azure Web Apps

One command deploy with Bicep and Docker

Challenge

Our assumptions are that we will scale apps or APIs independently of each other, that they can handle customer data of different confidentiality levels, and that we aim to have at least one pre-production environment as similar to production as possible.

Let’s start by having a look at how App Service plans are often dedicated to a single app. We compare the monthly running costs of different environment options, with development running on the App Service Standard plan in some of them, while production always runs on Premium.

We also rule out the Shared and Basic App Service plans outright, as they run in a shared environment, have a monthly hour limit and/or do not guarantee an SLA, none of which is in the paying customers’ best interest.

All the prices below (as of 2021-08) are for the App Service Linux plan, the cheaper of the two, as the Windows plan adds the cost of the operating system license.

In Premium, we get double the performance and useful additional features, such as the option to hide the App Service from the public internet altogether by using Private Endpoints.

Let’s then have a look at the yearly App Service costs when having three apps (or a single-page app using three APIs):

Scaling the above tenfold (30 apps):

This means a difference of roughly 25K between the cheapest and the most expensive option.

We have linear growth in all the presented cases so far.

So the expenses (or savings) for 30 apps seem predictable? Hold on.

The above charts use the lowest tier of each plan: S1 in Standard and P1V2 in Premium. Bumping a single App Service plan up one tier doubles the vCPUs and RAM available to the apps, effectively doubling their performance, but it also doubles the plan’s cost.

Logical.

Similarly, each instance (App Service host) added to a plan within the same tier adds the full per-instance price: going from one instance to two doubles the plan’s cost, and going to three triples it. Instance counts are often doubled or tripled (from one) to guard against exceptions occurring on a single host, in which case customers are served from a healthy App Service host without interruption.

Second region? Multiply by two.
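These multipliers compose: tier steps double the price, instances add up linearly and a second region duplicates the whole setup. A minimal sketch of that arithmetic, assuming a hypothetical per-instance monthly base price for the lowest tier (look up the current figure for your region); `plan_cost` is an illustrative name, not part of the repository:

```shell
# Hypothetical helper: estimate the monthly cost of an App Service plan setup.
# Usage: plan_cost BASE_PRICE TIER_STEPS INSTANCES REGIONS
#   BASE_PRICE: monthly price of one instance in the lowest tier (S1 or P1V2)
#   TIER_STEPS: tiers above the lowest (e.g. P1V2=0, P2V2=1, P3V2=2)
#   INSTANCES:  number of instances (hosts) in the plan
#   REGIONS:    number of regions the setup is duplicated to
plan_cost() {
  # Each tier step doubles the price (2^steps); instances and regions
  # multiply it linearly. awk is used for decimal arithmetic.
  awk -v b="$1" -v t="$2" -v i="$3" -v r="$4" \
    'BEGIN { printf "%.2f\n", b * (2 ^ t) * i * r }'
}
```

For example, `plan_cost 100 1 2 2` prints `800.00`: one tier up, two instances and two regions make eight times the base price.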

As organizations scale up cloud computing at a pace never seen before, well-placed optimizations multiply their effect on overall costs, performance and reliability. This is especially likely when the development culture has grown to share practices between teams.

Resolution

With the above charts as a starting point, we decide to combine our non-production environments into a single App Service plan.

Moreover, we expect this to lead to greater efficiency in QA, DevOps and security practices, simply by having a third fewer Azure resources to manage.

We decide to use the Premium plan for both non-production and production to minimize the variance between the environments from the start.

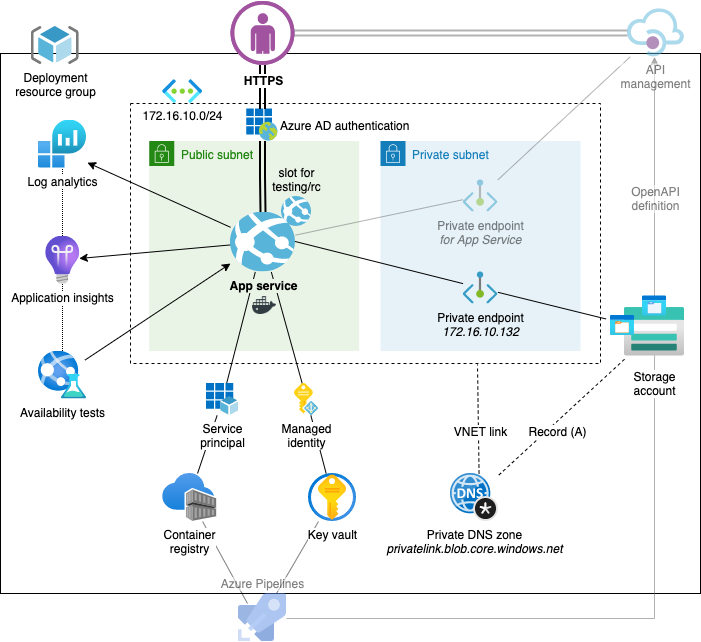

Thus an identical PaaS is deployed for both, including:

- App Service with deployment slots, all environments behind Azure AD authentication

- Traffic, application and audit logging to a Log Analytics workspace

- Application Insights for real-time monitoring, with alerts sent as push notifications to the Azure mobile app

- Storage Account with a private endpoint (and private DNS) for persistent Docker volumes and long-term log storage

- Key Vault for secrets, with App Service fetching them using a managed identity over the backbone network

- Azure Container Registry for hosting Docker images, with App Service pulling them using a service principal

Using slots over plans

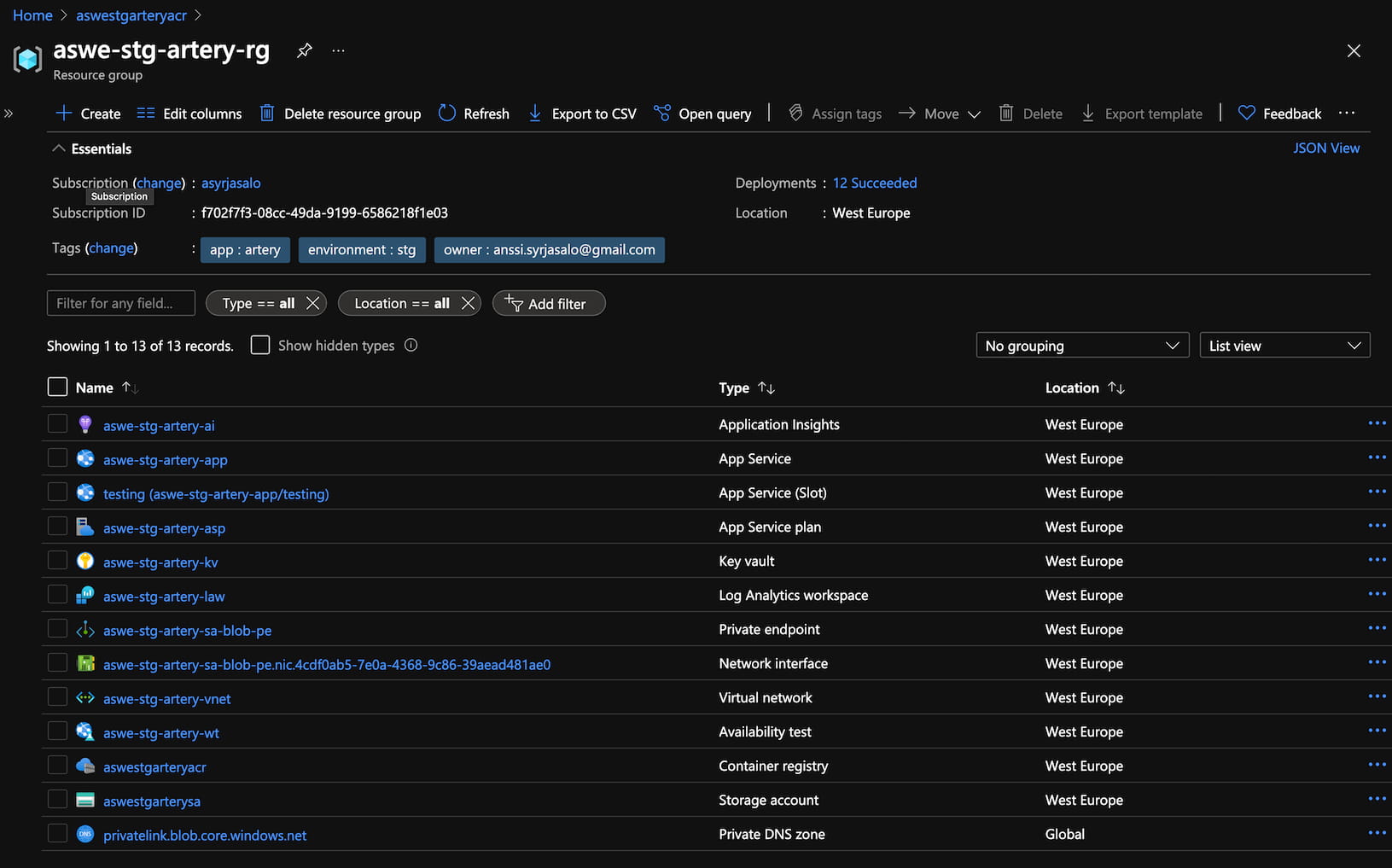

We will deploy two resource groups, one for non-production (stg) and one for production (prod). These will include our App Service plans and the back office with monitoring, container registry, etc.

The stg resource group’s App Service plan will host, besides staging, an App Service deployment slot named testing. The slot is used as any pre-staging environment usually is, that is, for integrating the upcoming features and fixes together in the mainline.

We assume staging is what is usually used for internal demos and that it has the most production-like data. As for testing, we recognize that it does not hurt to develop against production-like data from day one.

Going from testing to staging is as simple as swapping the testing slot with the App Service’s main slot, which serves staging. Note that after the swap, the testing slot holds the previous staging release, so the current release ought to be redeployed to testing unless there is a particular reason to keep the previous staging release around.

Similarly, production will include the deployment slot rc, which is dedicated to any final checks that ought to be done (especially with production data) before going live for customers.

Additionally, the rc slot can be used to implement a “canary” release, that is, redirecting e.g. 5% of the customers from the current production to rc, allowing changes to be piloted with a small number of customers first.
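With the Azure CLI, such a split can be configured with `az webapp traffic-routing`. A minimal sketch, assuming the prod.env variables are exported and that `$AZ_SLOT_POSTFIX` holds the rc slot’s name; `route_to_slot` and `clear_slot_routing` are hypothetical helpers, and setting `AZ=echo` prints the commands instead of running them:

```shell
# Hypothetical canary helpers for the rc slot.
# Assumes AZ_PREFIX, AZ_ENVIRONMENT, AZ_NAME, AZ_SUBSCRIPTION_ID and
# AZ_SLOT_POSTFIX (the slot name, e.g. rc) are exported from prod.env.
# Set AZ=echo to print the commands instead of executing them.

# Route PERCENT of production traffic to the slot, e.g. route_to_slot 5
route_to_slot() {
  "${AZ:-az}" webapp traffic-routing set \
    --name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app" \
    --resource-group "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg" \
    --subscription "$AZ_SUBSCRIPTION_ID" \
    --distribution "$AZ_SLOT_POSTFIX=$1"
}

# Remove the routing rule so that all traffic goes to production again.
clear_slot_routing() {
  "${AZ:-az}" webapp traffic-routing clear \
    --name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app" \
    --resource-group "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg" \
    --subscription "$AZ_SUBSCRIPTION_ID"
}
```

For example, `route_to_slot 5` sends about 5% of the users to rc, and `clear_slot_routing` returns everyone to production.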

After swapping rc to production in the App Service, the previous production is held in the rc slot, so that if errors start occurring in production, the previous production can be swapped back as fast as possible.

For this to work, database migrations ought to have already been run in rc.

Sometimes errors only occur with production data, and as a result rc also happens to be the ideal place for debugging them.
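Because a swap simply exchanges the two slots, rolling back is the same swap run again. A hedged sketch, assuming the prod.env variables are exported and that `$AZ_SLOT_POSTFIX` holds the rc slot’s name; `rollback_production` is a hypothetical helper, and clearing traffic routing first is a precaution in case a canary split is active:

```shell
# Hypothetical rollback helper: swap the rc slot back into production.
# Set AZ=echo to print the commands instead of executing them.
rollback_production() {
  local app="$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app"
  local rg="$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg"
  # Remove a possible canary split first so every request hits one release.
  "${AZ:-az}" webapp traffic-routing clear \
    --name "$app" --resource-group "$rg" \
    --subscription "$AZ_SUBSCRIPTION_ID" || return
  # Swapping again returns the previous release (now in rc) to production.
  "${AZ:-az}" webapp deployment slot swap \
    --name "$app" --resource-group "$rg" \
    --subscription "$AZ_SUBSCRIPTION_ID" \
    --slot "$AZ_SLOT_POSTFIX" --target-slot production
}
```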

Deploying to testing and rc, and swapping them to staging and prod, also ensures zero-downtime deployments in App Service. This is preferable not only in production but also in staging, as there is often internal demoing going on.

We will later introduce an Azure DevOps pipeline to do the deployment and the swap, as well as implement an approval step before swapping the slots in production.

Creating Azure resources

Setup prerequisites

Clone the git repository:

```shell
git clone https://github.com/raas-dev/artery.git
cd artery/bicep
```

Azure CLI [1], Bash and Docker [2] are assumed present.

Install or upgrade Bicep [3]:

```shell
az bicep install
az bicep upgrade
```

One command deploy

If you want to create or upgrade the environment with one command, copy stg.env.example to stg.env, configure the variables and run:

```shell
./deploy stg.env
```
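For reference, these are the variables that the commands in this post rely on. The sketch below shows a stg.env with purely hypothetical placeholder values; the repository’s stg.env.example is authoritative and may define more:

```shell
# stg.env (sketch): hypothetical placeholder values, replace with your own.
# stg.env.example in the repository is the authoritative list.
AZ_SUBSCRIPTION_ID="00000000-0000-0000-0000-000000000000"
AZ_LOCATION="westeurope"
AZ_OWNER="owner@example.com"   # email that receives the alerts
AZ_PREFIX="contoso"            # hyphens are stripped for ACR/Storage names
AZ_ENVIRONMENT="stg"
AZ_NAME="artery"
AZ_SLOT_POSTFIX="testing"      # slot name (presumably rc in prod.env)
```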

Deploy steps clarified

The steps that deploy runs are explained below. You may run the script as part of continuous deployment, but note that some downtime can occur depending on the tier of the App Service plan and the number of instances.

Export the variables:

```shell
set -a; source stg.env; set +a
```
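A missing variable silently produces names like `--stg--rg` in the commands that follow. A small guard can fail early instead; `check_env` is a hypothetical helper, and its list only mirrors the variables used in this post:

```shell
# Hypothetical guard: fail if any variable the commands rely on is empty.
# The list mirrors the variables used in this post; stg.env.example may have more.
check_env() {
  local var value missing=0
  for var in AZ_SUBSCRIPTION_ID AZ_LOCATION AZ_PREFIX AZ_ENVIRONMENT \
             AZ_NAME AZ_OWNER AZ_SLOT_POSTFIX; do
    # Indirect lookup of the variable named by $var (POSIX-compatible).
    eval "value=\${$var:-}"
    if [ -z "$value" ]; then
      echo "error: $var is not set" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Run `check_env || exit 1` right after sourcing the file.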

Create a target resource group for deployment:

```shell
az group create \
  --name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg" \
  --location "$AZ_LOCATION" \
  --subscription "$AZ_SUBSCRIPTION_ID" \
  --tags app="$AZ_NAME" environment="$AZ_ENVIRONMENT" owner="$AZ_OWNER"
```
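Every command in this post repeats the `$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-<suffix>` pattern, while the registry and Storage Account names drop the hyphens. If you script the steps yourself, two small helpers keep the names consistent; `resource_name` and `compact_name` are hypothetical and not part of the repository:

```shell
# Hypothetical naming helpers mirroring the convention used in this post.

# resource_name SUFFIX -> e.g. contoso-stg-artery-rg
resource_name() {
  printf '%s-%s-%s-%s\n' "$AZ_PREFIX" "$AZ_ENVIRONMENT" "$AZ_NAME" "$1"
}

# compact_name SUFFIX -> e.g. contosostgarteryacr
# Equivalent to ${AZ_PREFIX//-/}${AZ_ENVIRONMENT//-/}${AZ_NAME//-/}SUFFIX in
# Bash, but POSIX-compatible: all hyphens are removed with sed.
compact_name() {
  printf '%s%s%s%s\n' "$AZ_PREFIX" "$AZ_ENVIRONMENT" "$AZ_NAME" "$1" | sed 's/-//g'
}
```

For example, `az group create --name "$(resource_name rg)" ...` or `az acr login --name "$(compact_name acr)"`.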

Create an AAD app to be used for authentication on the App Service and the slot:

```shell
AZ_AAD_APP_CLIENT_SECRET="$(openssl rand -base64 32)"

az ad app create \
  --display-name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-appr" \
  --available-to-other-tenants false \
  --homepage "https://$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app.azurewebsites.net" \
  --reply-urls \
    "https://$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app.azurewebsites.net/.auth/login/aad/callback" \
    "https://$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app-$AZ_SLOT_POSTFIX.azurewebsites.net/.auth/login/aad/callback" \
  --password "$AZ_AAD_APP_CLIENT_SECRET"
```

Fetch the AAD app’s client ID:

```shell
AZ_AAD_APP_CLIENT_ID="$(az ad app list \
  --display-name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-appr" \
  --query "[].appId" --output tsv)"
```

Create a service principal for the above AAD app:

```shell
az ad sp create --id "$AZ_AAD_APP_CLIENT_ID"
```

Next, ensure you have the Azure AD role Cloud Application Administrator in order to be able to grant the User.Read permission to the App Service and the slot.

User.Read is the minimum Graph API permission required for App Service to implement authentication so that only members of your Azure AD tenant will be able to access the app after AAD login.

If you do not have the Cloud Application Administrator role, you can either ask your Azure AD tenant’s Global Administrator to grant it to you, or alternatively request an administrator to consent to the created AAD app on behalf of the organization.

Grant User.Read on the Azure AD Graph API to the App Service’s AAD app:

```shell
az ad app permission add \
  --id "$AZ_AAD_APP_CLIENT_ID" \
  --api "00000002-0000-0000-c000-000000000000" \
  --api-permissions "311a71cc-e848-46a1-bdf8-97ff7156d8e6=Scope"

az ad app permission grant \
  --id "$AZ_AAD_APP_CLIENT_ID" \
  --api "00000002-0000-0000-c000-000000000000"
```
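The `az ad` commands above target the Azure AD Graph based Azure CLI of the time. From Azure CLI 2.37 on, these commands use Microsoft Graph, and `az ad app create` no longer accepts `--available-to-other-tenants`, `--homepage`, `--reply-urls` or `--password`. A hedged sketch of the same steps on a current CLI, requesting Microsoft Graph User.Read instead of the deprecated Azure AD Graph permission; `create_aad_app` is a hypothetical helper, so verify the flags with `az ad app create --help` on your version:

```shell
# Sketch for Azure CLI 2.37 and later (Microsoft Graph based `az ad`).
# Hypothetical helper; sets AZ_AAD_APP_CLIENT_ID and AZ_AAD_APP_CLIENT_SECRET.
# Set AZ=echo to print the commands instead of executing them.
create_aad_app() {
  local base="$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME"
  local app_url="https://$base-app.azurewebsites.net"
  local slot_url="https://$base-app-$AZ_SLOT_POSTFIX.azurewebsites.net"

  # Single-tenant app with the App Service and slot callback URLs.
  AZ_AAD_APP_CLIENT_ID="$("${AZ:-az}" ad app create \
    --display-name "$base-appr" \
    --sign-in-audience AzureADMyOrg \
    --web-home-page-url "$app_url" \
    --web-redirect-uris "$app_url/.auth/login/aad/callback" \
      "$slot_url/.auth/login/aad/callback" \
    --query appId --output tsv)" || return

  # app create no longer takes --password; let Azure generate a secret.
  AZ_AAD_APP_CLIENT_SECRET="$("${AZ:-az}" ad app credential reset \
    --id "$AZ_AAD_APP_CLIENT_ID" --append \
    --query password --output tsv)" || return

  "${AZ:-az}" ad sp create --id "$AZ_AAD_APP_CLIENT_ID" || return

  # Delegated User.Read on Microsoft Graph (00000003-...).
  "${AZ:-az}" ad app permission add --id "$AZ_AAD_APP_CLIENT_ID" \
    --api 00000003-0000-0000-c000-000000000000 \
    --api-permissions e1fe6dd8-ba31-4d61-89e7-88639da4683d=Scope || return
  "${AZ:-az}" ad app permission grant --id "$AZ_AAD_APP_CLIENT_ID" \
    --api 00000003-0000-0000-c000-000000000000 --scope User.Read
}
```

On the same CLI versions, `az ad sp list` returns the object ID as `id` rather than `objectId`, so the `AZ_ACR_SP_OBJECT_ID` query in the service principal step needs `"[].id"`.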

Next, we will create a dedicated service principal for App Service to be used for pulling Docker images from Azure Container Registry on deployment.

This is the second-best option, as using the App Service’s managed identity unfortunately does not work [4] for container registry operations.

Nevertheless, using a service principal is a better option than leaving the ACR admin account enabled and using the admin credentials just for pulling images from the registry on deployment.

Create a service principal for App Service (and slot) to pull images from ACR:

```shell
AZ_ACR_SP_PASSWORD="$(az ad sp create-for-rbac \
  --name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-sp" \
  --skip-assignment \
  --only-show-errors \
  --query password --output tsv)"

AZ_ACR_SP_CLIENT_ID="$(az ad sp list \
  --display-name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-sp" \
  --only-show-errors \
  --query "[].appId" --output tsv)"

AZ_ACR_SP_OBJECT_ID="$(az ad sp list \
  --display-name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-sp" \
  --only-show-errors \
  --query "[].objectId" --output tsv)"
```

Finally, create a deployment in the resource group with Bicep:

```shell
az deployment group create \
  --resource-group "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg" \
  --subscription "$AZ_SUBSCRIPTION_ID" \
  --template-file main.bicep \
  -p prefix="$AZ_PREFIX" \
  -p name="$AZ_NAME" \
  -p environment="$AZ_ENVIRONMENT" \
  -p owner="$AZ_OWNER" \
  -p app_slot_postfix="$AZ_SLOT_POSTFIX" \
  -p acr_sp_client_id="$AZ_ACR_SP_CLIENT_ID" \
  -p acr_sp_object_id="$AZ_ACR_SP_OBJECT_ID" \
  -p acr_sp_password="$AZ_ACR_SP_PASSWORD" \
  -p aad_app_client_id="$AZ_AAD_APP_CLIENT_ID" \
  -p aad_app_client_secret="$AZ_AAD_APP_CLIENT_SECRET"
```
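Before re-running the deployment against an existing environment, you can preview what the template would change with `az deployment group what-if`, which accepts the same template and parameters. A sketch, where `preview_deployment` is a hypothetical wrapper that forwards the `-p` arguments unchanged:

```shell
# Hypothetical wrapper: show the changes main.bicep would make, without
# applying them. Pass the same -p name=value arguments as to
# az deployment group create (including the secrets the template requires).
# Set AZ=echo to print the command instead of executing it.
preview_deployment() {
  "${AZ:-az}" deployment group what-if \
    --resource-group "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg" \
    --subscription "$AZ_SUBSCRIPTION_ID" \
    --template-file main.bicep \
    "$@"
}
```

Call it as `preview_deployment -p prefix="$AZ_PREFIX" -p name="$AZ_NAME" ...` with the full parameter list.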

After the deployment finishes successfully, the resource group contents are as follows:

Now, browsing to the App Service’s or the testing slot’s URL, you will see the following:

Next, we will deploy the app with Docker.

From Docker to App Service

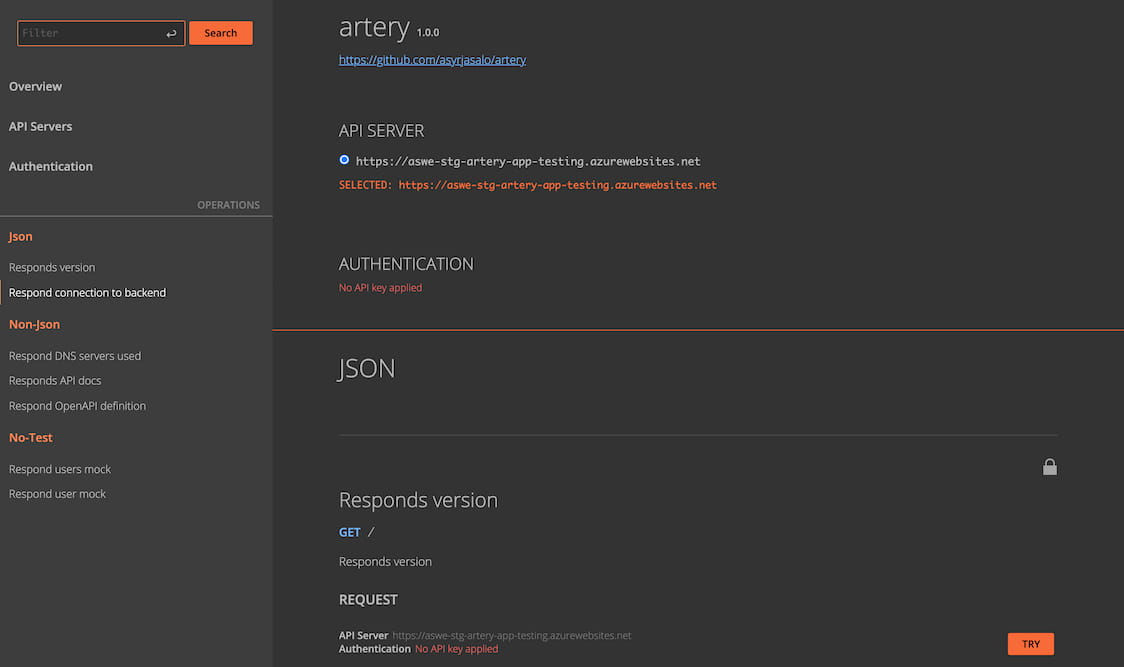

We will use an example API implemented in TypeScript and Express.js, running on Node.js in a Docker container.

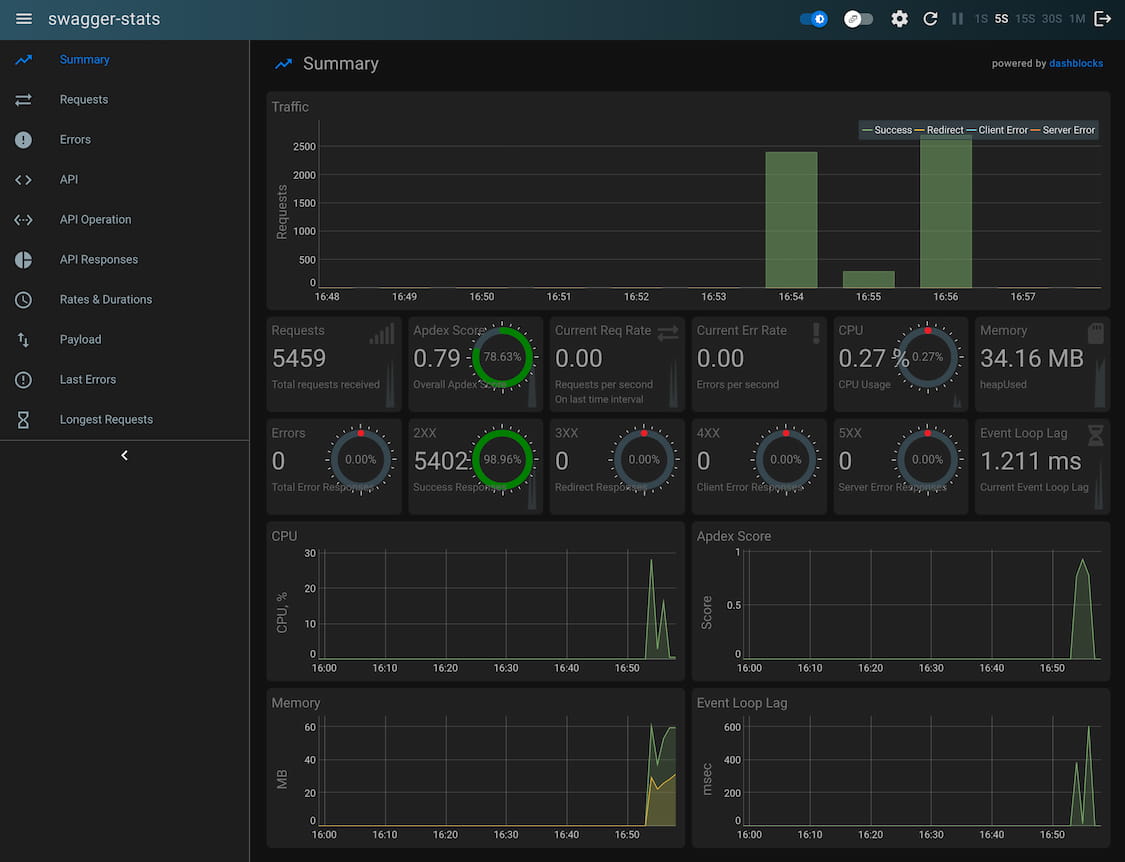

The API implements endpoints for demonstrating private endpoint connectivity, an example of hosting interactive API documentation powered by RapiDoc [5], the Express middleware swagger-stats [6] providing real-time statistics in a web UI, and the express-openapi-validator [7] middleware for routing and validating HTTP request parameters based on the OpenAPI/Swagger definition.

If you want to deploy a more sophisticated solution for verifying connectivity to services fronted by private endpoints, I recommend taking a look at Janne Mattila’s webapp-network-tester [8].

Deploy to the slot

Go back to the repository root:

```shell
cd ..
```

Build the Docker image (and run a container from it, printing the Node.js version):

```shell
IMAGE_KIND="alpine" \
BUILD_ARGS="--pull --no-cache" \
docker/build_and_test_image node --version
```

Log in to your Azure Container Registry:

```shell
az acr login --name "${AZ_PREFIX//-/}${AZ_ENVIRONMENT//-/}${AZ_NAME//-/}acr"
```

Push the Docker image to the registry:

```shell
REGISTRY_FQDN="${AZ_PREFIX//-/}${AZ_ENVIRONMENT//-/}${AZ_NAME//-/}acr.azurecr.io" \
docker/tag_and_push_image
```
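The registry name is built by stripping the hyphens because Azure Container Registry names may contain only alphanumeric characters (5 to 50 of them); the same pattern with an `sa` suffix names the Storage Account, which allows only 3 to 24 lowercase letters and digits. A hedged pre-flight check, assuming the naming convention above; `valid_acr_name`, `valid_storage_name` and `check_names` are hypothetical helpers:

```shell
# Hypothetical pre-flight checks for the hyphen-less resource names.

# ACR: 5-50 alphanumeric characters.
valid_acr_name() {
  printf '%s\n' "$1" | grep -Eq '^[A-Za-z0-9]{5,50}$'
}

# Storage Account: 3-24 lowercase letters and digits.
valid_storage_name() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{3,24}$'
}

# Derive both names like the commands in this post and check them.
check_names() {
  local base
  base="$(printf '%s%s%s\n' "$AZ_PREFIX" "$AZ_ENVIRONMENT" "$AZ_NAME" | sed 's/-//g')"
  valid_acr_name "${base}acr" ||
    { echo "invalid ACR name: ${base}acr" >&2; return 1; }
  valid_storage_name "${base}sa" ||
    { echo "invalid Storage Account name: ${base}sa" >&2; return 1; }
}
```

If `check_names` fails, shorten `AZ_PREFIX` or `AZ_NAME` (or lowercase them) before deploying.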

Create a container from the image in the App Service slot:

```shell
az webapp config container set \
  --name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app" \
  --slot "$AZ_SLOT_POSTFIX" \
  --resource-group "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg" \
  --subscription "$AZ_SUBSCRIPTION_ID" \
  --docker-registry-server-url "https://${AZ_PREFIX//-/}${AZ_ENVIRONMENT//-/}${AZ_NAME//-/}acr.azurecr.io" \
  --docker-custom-image-name "${AZ_PREFIX//-/}${AZ_ENVIRONMENT//-/}${AZ_NAME//-/}acr.azurecr.io/$AZ_NAME:main"
```

Wait for the slot to restart, or restart it immediately:

```shell
az webapp restart \
  --name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app" \
  --slot "$AZ_SLOT_POSTFIX" \
  --resource-group "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg" \
  --subscription "$AZ_SUBSCRIPTION_ID"
```

You can ignore the warning regarding the registry credentials, as the username and password are read from the Key Vault by the App Service’s service principal.

Browse to https://APP_SERVICE_SLOT_URL/docs, authenticate with your Azure AD account (if not already logged in) and you will see the API docs:

Swap the slots

After experimenting with the API, swap the testing slot with staging in the App Service:

```shell
az webapp deployment slot swap \
  --name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app" \
  --slot "$AZ_SLOT_POSTFIX" \
  --resource-group "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg" \
  --subscription "$AZ_SUBSCRIPTION_ID"
```

Note that after the swap, the previous staging release is now in the testing slot. You may redeploy to the testing slot to bring testing up to date for the team to continue working on:

```shell
az webapp config container set \
  --name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app" \
  --slot "$AZ_SLOT_POSTFIX" \
  --resource-group "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg" \
  --subscription "$AZ_SUBSCRIPTION_ID" \
  --docker-registry-server-url "https://${AZ_PREFIX//-/}${AZ_ENVIRONMENT//-/}${AZ_NAME//-/}acr.azurecr.io" \
  --docker-custom-image-name "${AZ_PREFIX//-/}${AZ_ENVIRONMENT//-/}${AZ_NAME//-/}acr.azurecr.io/$AZ_NAME:main"

az webapp restart \
  --name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app" \
  --slot "$AZ_SLOT_POSTFIX" \
  --resource-group "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg" \
  --subscription "$AZ_SUBSCRIPTION_ID"
```
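The container configuration and the restart are repeated verbatim every time the slot is redeployed. If you script this, a small wrapper avoids the duplication; `deploy_to_slot` is a hypothetical helper that takes the image tag as an argument (defaulting to `main`, as above):

```shell
# Hypothetical helper: point the slot at an image in ACR and restart it.
# Usage: deploy_to_slot [TAG]   (TAG defaults to main)
# Set AZ=echo to print the commands instead of executing them.
deploy_to_slot() {
  local tag="${1:-main}"
  local app="$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app"
  local rg="$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg"
  # Same hyphen-less registry name as ${AZ_PREFIX//-/}... in Bash.
  local registry
  registry="$(printf '%s%s%sacr.azurecr.io\n' "$AZ_PREFIX" "$AZ_ENVIRONMENT" "$AZ_NAME" | sed 's/-//g')"

  "${AZ:-az}" webapp config container set \
    --name "$app" --slot "$AZ_SLOT_POSTFIX" \
    --resource-group "$rg" --subscription "$AZ_SUBSCRIPTION_ID" \
    --docker-registry-server-url "https://$registry" \
    --docker-custom-image-name "$registry/$AZ_NAME:$tag" || return

  "${AZ:-az}" webapp restart \
    --name "$app" --slot "$AZ_SLOT_POSTFIX" \
    --resource-group "$rg" --subscription "$AZ_SUBSCRIPTION_ID"
}
```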

Real-time endpoint stats

Set the swagger-stats username and password (and the private backend URL used later) in the App Service slot’s application settings:

```shell
az webapp config appsettings set \
  --name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app" \
  --slot "$AZ_SLOT_POSTFIX" \
  --resource-group "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg" \
  --subscription "$AZ_SUBSCRIPTION_ID" \
  --settings SWAGGER_STATS_USERNAME=main \
    SWAGGER_STATS_PASSWORD="$(git rev-parse HEAD)" \
    PRIVATE_BACKEND_URL="https://${AZ_PREFIX//-/}${AZ_ENVIRONMENT//-/}${AZ_NAME//-/}sa.blob.core.windows.net/public/openapi.yaml"

az webapp restart \
  --name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app" \
  --slot "$AZ_SLOT_POSTFIX" \
  --resource-group "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg" \
  --subscription "$AZ_SUBSCRIPTION_ID"
```

Go to https://APP_SERVICE_SLOT_URL/stats and you will be presented with a login form.

Note that this login form is not related to Azure AD authentication, even though all the endpoints are behind the Azure AD authentication wall in any case.

Use main as the username and the SHA of the latest git commit as the password to log in.

Operational best practices

Monitoring

The back office was created as part of the PaaS deployment and is best explored from the Azure Portal:

- Application Insights metrics which are streamed by the Express.js middleware

- Querying HTTP and audit logs in the Log Analytics Workspace

- Alerts for suddenly increased response time and decreased availability (SLA)

- Availability (web) tests run against the App Service from multiple regions around the world
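The workspace can also be queried from the command line. A hedged sketch using `az monitor log-analytics query` (depending on your Azure CLI version, it may ask to install an extension), assuming the App Service HTTP logs land in the resource-specific `AppServiceHTTPLogs` table; the workspace argument is the workspace’s customer ID (a GUID), and `recent_server_errors` is a hypothetical helper:

```shell
# Hypothetical helper: count HTTP 5xx responses per App Service in 5-minute bins.
# Usage: recent_server_errors WORKSPACE_CUSTOMER_ID [TIMESPAN]
#   TIMESPAN is an ISO 8601 duration, defaulting to the last hour (PT1H).
# Assumes HTTP logs are sent to the AppServiceHTTPLogs table.
# Set AZ=echo to print the command instead of executing it.
recent_server_errors() {
  "${AZ:-az}" monitor log-analytics query \
    --workspace "$1" \
    --timespan "${2:-PT1H}" \
    --analytics-query 'AppServiceHTTPLogs | where ScStatus >= 500 | summarize ErrorCount = count() by _ResourceId, bin(TimeGenerated, 5m)' \
    --output table
}
```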

By default, the alerts are sent to the Azure mobile app as push notifications, with the recipient specified by the email configured as $AZ_OWNER. If you are not using the mobile app, you may want to configure alternative ways of getting alerted in ai.bicep.

Service Endpoints

The public subnet is delegated to the App Service, and all the possible service endpoints are already enabled in the subnet, allowing the target services to restrict their inbound traffic to this subnet, e.g. as done on the Key Vault side.

Traffic over service endpoints traverses the Azure backbone network, although the target services continue to expose their public IPs. Regardless of whether services are exposed publicly or privately, authentication is enforced, except for blobs in the “public” container of the Storage Account.

Azure Container Registry ought to be kept publicly reachable, as Azure Pipelines needs it to build and push Docker images to the registry. The ACR admin user is disabled and ought not to be enabled; instead, managed identities or, when they are not possible, service principals should be used.

Private Endpoints

The general guideline advocated by this reference is to use private endpoints for all the storage services and databases that App Service fetches data from. The private endpoints ought to be deployed in the private subnet of the virtual network, as done for the Storage Account (sa.bicep) in main.bicep.

Browse to https://APP_SERVICE_SLOT_URL/to-backend to witness that the DNS query from App Service to the Storage Account resolves to a private IP. The URL of the Storage Account is taken from the App Service application setting PRIVATE_BACKEND_URL set earlier.
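To check the same by hand, resolve the Storage Account’s blob host name from a shell inside the app (if your container image provides one, along with a resolver tool) and test whether the returned address is private. A minimal sketch of that test, assuming the virtual network uses RFC 1918 address space; `is_private_ipv4` is a hypothetical helper:

```shell
# Hypothetical helper: succeed if $1 is an IPv4 address in an RFC 1918 range
# (10.0.0.0/8, 172.16.0.0/12 or 192.168.0.0/16), fail otherwise.
is_private_ipv4() {
  # Split the dotted quad into octets with awk and compare them numerically.
  printf '%s\n' "$1" | awk -F. '
    NF != 4 { exit 1 }
    { for (i = 1; i <= 4; i++) if ($i !~ /^[0-9]+$/ || $i > 255) exit 1 }
    $1 == 10 { exit 0 }
    $1 == 172 && $2 >= 16 && $2 <= 31 { exit 0 }
    $1 == 192 && $2 == 168 { exit 0 }
    { exit 1 }'
}
```

For example, `is_private_ipv4 10.0.2.4` succeeds, while a documentation address such as `203.0.113.7` fails.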

You can ignore the error message regarding the missing file, or alternatively download the OpenAPI definition from the endpoint https://APP_SERVICE_SLOT_URL/spec and upload it to the Storage Account’s public container with the name openapi.yaml to get rid of the message.

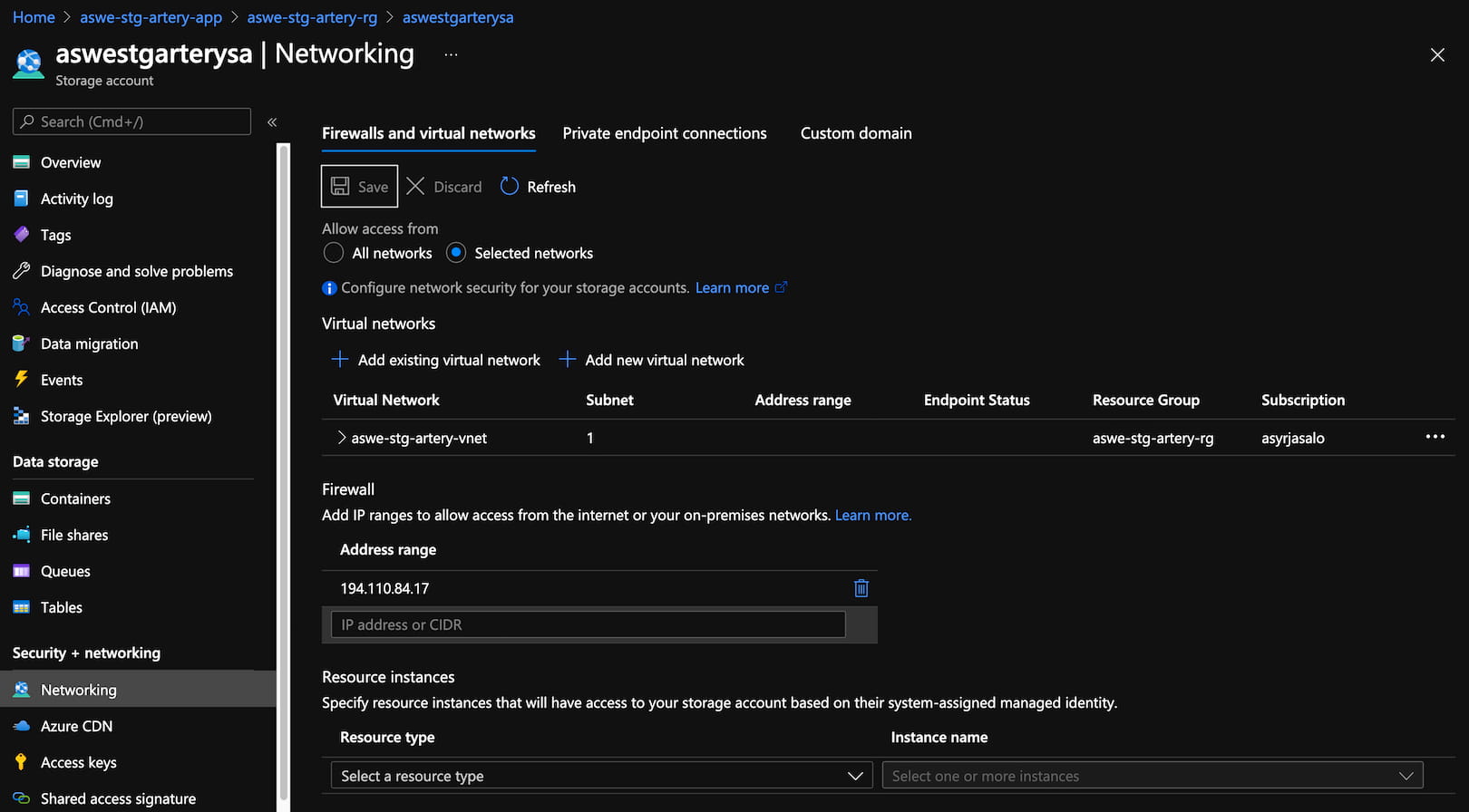

Note that you have to add your own IP address as allowed in the Storage Account’s Networking settings to be able to upload files over the public network.
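Both steps can also be done with the Azure CLI. A hedged sketch, where `MY_IP` is your public IP address (an assumption you supply) and `upload_spec` is a hypothetical helper; without `--auth-mode login`, the CLI falls back to fetching the account key, so pass that flag instead if you have a data-plane role such as Storage Blob Data Contributor:

```shell
# Hypothetical helper: allow MY_IP through the Storage Account firewall and
# upload the OpenAPI definition to the "public" container as openapi.yaml.
# Usage: upload_spec MY_IP [FILE]   (FILE defaults to ./openapi.yaml)
# Set AZ=echo to print the commands instead of executing them.
upload_spec() {
  local my_ip="$1" file="${2:-openapi.yaml}"
  local rg="$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg"
  local account
  account="$(printf '%s%s%ssa\n' "$AZ_PREFIX" "$AZ_ENVIRONMENT" "$AZ_NAME" | sed 's/-//g')"

  "${AZ:-az}" storage account network-rule add \
    --resource-group "$rg" --account-name "$account" \
    --subscription "$AZ_SUBSCRIPTION_ID" --ip-address "$my_ip" || return

  "${AZ:-az}" storage blob upload \
    --account-name "$account" --subscription "$AZ_SUBSCRIPTION_ID" \
    --container-name public --name openapi.yaml \
    --file "$file" --overwrite
}
```

The network rule may take a short while to apply, so the upload can fail with an authorization error if run immediately; retrying after a moment is usually enough.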

The definition file will later be used for importing the API into Azure API Management. Note that there is nothing secret in the OpenAPI definition itself (as the name hints :), as it is widely used for telling client apps how to programmatically connect to the API.

Besides storage services and databases, private endpoints can also be created in front of both the App Service and its slots. Note that this requires a Premium-tier App Service plan, which is usually the case in production and, now that there are fewer environments, might not hurt in non-production either.

While this effectively hides the App Service from the public internet entirely, which is most likely welcome in mission-critical production systems (rather than merely restricting inbound traffic with the App Service’s network rules), there are currently a couple of limitations to be aware of:

- Azure availability tests stop working, as the App Service no longer exposes a public endpoint to run the tests against

- Deploying to App Service from Azure DevOps will not work unless you run a self-hosted agent in a virtual network that is peered with the App Service’s virtual network.

While these are resolvable, the latter especially introduces the maintenance of CI/CD agents, which is not in the scope of this tutorial. The App Service specific private endpoints are left as a reference in main.bicep, albeit commented out.

Exercise: Function Apps

As Function Apps are essentially backed by the App Service technology, this reference can be used to deploy Function Apps instead, with minor changes to the parameters for app.bicep.

Hint: In addition, you have to set the App Service application setting FUNCTIONS_EXTENSION_VERSION to the runtime version, e.g. ~3.
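For the hint above, the setting can be applied the same way as the swagger-stats settings earlier. A sketch, assuming the app and slot follow the same naming convention; `set_functions_runtime` is a hypothetical helper, and `~3` is the example version from the hint:

```shell
# Hypothetical helper: set the Functions runtime version on the slot
# named by $AZ_SLOT_POSTFIX.
# Usage: set_functions_runtime [VERSION]   (VERSION defaults to ~3)
# Set AZ=echo to print the command instead of executing it.
set_functions_runtime() {
  local version="${1:-}"
  # Default assigned in single quotes so the shell cannot tilde-expand it.
  [ -n "$version" ] || version='~3'
  "${AZ:-az}" webapp config appsettings set \
    --name "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-app" \
    --slot "$AZ_SLOT_POSTFIX" \
    --resource-group "$AZ_PREFIX-$AZ_ENVIRONMENT-$AZ_NAME-rg" \
    --subscription "$AZ_SUBSCRIPTION_ID" \
    --settings "FUNCTIONS_EXTENSION_VERSION=$version"
}
```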

Creating production is done similarly, except that you copy prod.env.example to prod.env and, after configuring the variables, run:

```shell
./deploy prod.env
```

What’s next?

In the next part, we will walk through creating Azure DevOps pipelines to update the Azure resources, push Docker images to Azure Container Registry, get App Service to pull them and swap the slots after approval.

We will also create a pipeline for importing/updating the API in an existing Azure API Management instance based on openapi.yaml, similarly to what we did with Azure Container Instances in the last post [9], but this time we will also create the Azure DevOps project and the pipelines programmatically.

Feel free to experiment with the template on GitHub [10], which includes more goodies covered later in the series. If you use the template, I hope you link to this tutorial/series so we can continue to educate more developers.

Until the next time.

Have fun. □